Active learning under variational inference.

MSc in Computer Science.

Outcome: Graduated

Summary

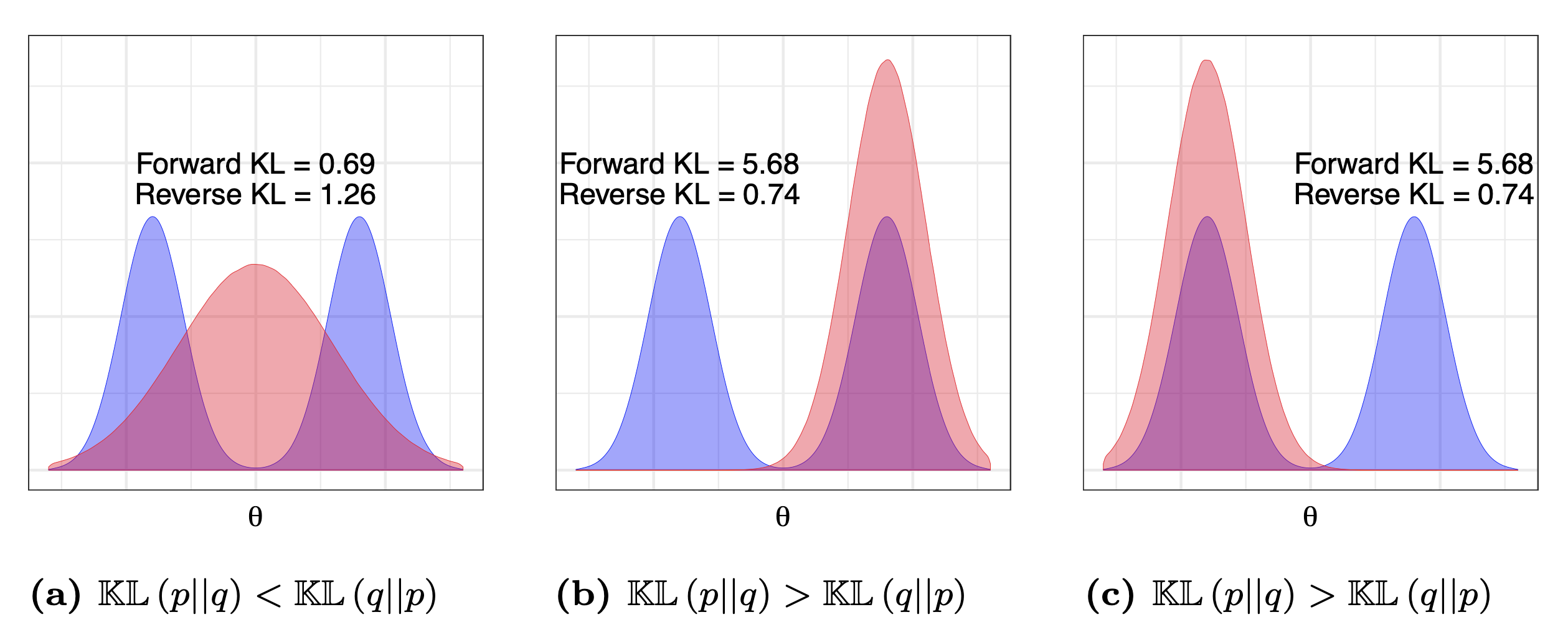

This work compares an approximate Bayesian approach to deep learning with a conventional approach in an active learning setting. The conventional approach optimizes a loss function and yields a single point estimate of the model parameters, with no measure of uncertainty. The Bayesian approach instead maintains a distribution over the parameters; because exact Bayesian inference is computationally infeasible for deep networks, a variational approximation is used.
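As a rough sketch of the contrast described above, the two approaches can be written in standard textbook form. The notation here is an assumption made for illustration and is not taken from the thesis: $\theta$ denotes the network weights, $\mathcal{D}$ the labeled data, $\mathcal{L}$ the training loss, $q_\phi$ the variational family, and $T$ the number of Monte Carlo samples.

```latex
% Conventional approach: a single point estimate of the weights
\hat{\theta} = \arg\min_{\theta} \mathcal{L}(\theta; \mathcal{D})

% Variational inference: fit q_\phi(\theta) \approx p(\theta \mid \mathcal{D})
% by maximizing the evidence lower bound (ELBO)
\mathrm{ELBO}(\phi) =
  \mathbb{E}_{q_\phi(\theta)}\!\left[ \log p(\mathcal{D} \mid \theta) \right]
  - \mathrm{KL}\!\left( q_\phi(\theta) \,\|\, p(\theta) \right)

% Predictive distribution approximated with T weight samples
p(y \mid x, \mathcal{D}) \approx \frac{1}{T} \sum_{t=1}^{T} p(y \mid x, \theta_t),
  \qquad \theta_t \sim q_\phi(\theta)
```

The spread of the sampled predictions $p(y \mid x, \theta_t)$ is what provides the uncertainty measure that a single point estimate lacks.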

Contributions

The comparison is carried out on three computer vision datasets, with the data points to be labeled chosen actively. The results show that selecting points according to model uncertainty outperforms selecting them at random. However, there is no evidence that the variational approximation is better than the frequentist methodology.
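The difference between uncertainty-based and random selection can be illustrated with a minimal sketch. The summary does not say which acquisition function the thesis uses, so predictive entropy is assumed here purely for illustration; the function names and the `(T, N, C)` array layout (T stochastic forward passes, N pool points, C classes) are likewise hypothetical.

```python
import numpy as np


def predictive_entropy(mc_probs):
    """Entropy of the mean predictive distribution for each pool point.

    mc_probs: array of shape (T, N, C) holding class probabilities from
    T stochastic forward passes (assumed layout, for illustration only).
    """
    mean_probs = mc_probs.mean(axis=0)  # average over passes -> (N, C)
    eps = 1e-12                         # avoids log(0)
    return -(mean_probs * np.log(mean_probs + eps)).sum(axis=1)


def select_by_uncertainty(mc_probs, k):
    """Indices of the k pool points with the highest predictive entropy."""
    scores = predictive_entropy(mc_probs)
    return np.argsort(-scores)[:k]


def select_at_random(n_pool, k, rng):
    """Random baseline: k distinct pool indices drawn uniformly."""
    return rng.choice(n_pool, size=k, replace=False)


# Toy pool of three points and two forward passes (made-up numbers).
toy_probs = np.array([
    [[0.98, 0.02], [0.50, 0.50], [0.90, 0.10]],
    [[0.96, 0.04], [0.55, 0.45], [0.85, 0.15]],
])
uncertain_picks = select_by_uncertainty(toy_probs, k=2)
random_picks = select_at_random(toy_probs.shape[1], k=2,
                                rng=np.random.default_rng(0))
```

In an active learning loop, the selected indices would be sent for labeling, added to the training set, and the model retrained before the next selection round.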

Tags

- Variational inference, Machine Learning